What’s Your Threat Score?

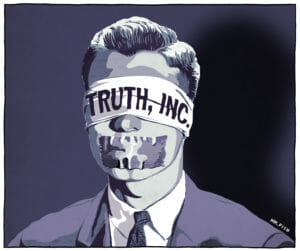

Authorities are harvesting and evaluating your data to calculate your "threat score." rickpilot_2000 / CC BY-SA 2.0


By Sarah Burris / AlterNet

This piece first appeared at AlterNet.

Police have found a new way to legally incorporate surveillance and profiling into everyday life. Just when you thought we were making progress raising awareness about police brutality, we have something new to contend with. The Police Threat Score isn't calculated by a racist police officer or a barrel-rolling cop who thinks he's on a TV drama; it's calculated by a computer algorithm that steals your data and estimates how much of a risk or threat you pose to the fuzz.

Beware is the new stats-bank that helps officers analyze "billions of data points, including arrest reports, property records, commercial databases, deep Web searches and…social-media postings" to ultimately come up with a score that indicates a person's potential for violence, according to a Washington Post story. There's no word yet on whether this metadata includes photos or is run through facial recognition software. Would an ordinary person who has yet to commit a crime be flagged, for example, for being seen wearing a hoodie in a gated Florida community?

The company tries to paint itself as a savior to first responders, claiming it wants to help them "understand the nature of the environment they may encounter during the window of a 911 event." Think of it like someone pulling your credit score when you apply for a job. Except in this instance, you never applied for the job, and they're pulling your credit score anyway because they knew you might apply. It's that level of creepiness.

Remember the 2002 Tom Cruise movie Minority Report? It's set in 2054, in a futuristic world where a "pre-crime" unit arrests people based on the visions of psychics who can see crimes before they happen. Only it's 2016, and instead of psychics, we're using computers that mine data. According to the Post piece, law enforcement in Oregon is under federal investigation for using software to monitor Black Lives Matter hashtags after the uprisings in Baltimore and Ferguson. How is this new software any different? In fact, this is the same kind of technology the NSA has been using since 9/11 to monitor the online activities of suspected terrorists. Police are just bringing it down to the local level.

According to FatalEncounters.org, a site that tracks deaths at the hands of police, there were only 14 days in 2015 on which a law enforcement officer did not kill someone. So leaving judgment up to individual officers hasn't been all that effective in policing. But is letting a machine do it any better? Using these factors to calculate a color-coded threat level doesn't seem entirely practical. Suppose a person doesn't use social media or own a house but was once arrested at 17 for possession of marijuana. The absence of any other data might leave that one arrest to drive a high threat level. The same can be said for online metadata that might pull in a person's hobbies and private interests. Could a person who is interested in kinky activity in the bedroom be tagged as having a tendency toward violence?

The Fresno, Calif., police department is taking on the daunting task of being the first to test the software in the field. Understandably, city council members and residents voiced their skepticism at a meeting. "One council member referred to a local media report saying that a woman's threat level was elevated because she was tweeting about a card game titled 'Rage,' which could be a keyword in Beware's assessment of social media," the Post reported.

While you might now be rethinking that Mafia game you play on Facebook, your name isn't the only thing that can raise a flag. Fresno Councilman Clinton Olivier, a libertarian-leaning Republican, asked for his name to be run through the system. He came up "green," which indicates he's safe. When they ran his address, however, it came up "yellow," meaning an officer should beware and be prepared for a potentially dangerous situation. How could this be? The councilman didn't always live in that house. Someone else lived there before him, and that person was likely responsible for raising the threat score.

Think what a disastrous situation that could create. A mother of a toddler could move into a new home with her family, not knowing that the house was once the home of an abusive patriarch. The American Medical Association has calculated that as many as 1 in 3 women will be affected by domestic violence in their lifetimes, so it isn't an unreasonable hypothetical. One day the child eats one of those detergent pods and suddenly stops breathing. Hysterical, the mother calls 911, screaming. She can't articulate what has happened, only that her baby is hurt. Dispatch sends an ambulance, but the address is flagged "red" for its prior decade of domestic violence calls. First responders don't know that someone new has moved in. The woman is giving CPR while her husband waits at the door for the ambulance. What happens when the police arrive?

It's a scenario that could play out with just about any family in any situation: moving into an apartment that was previously a marijuana grow house, or buying a house that once belonged to a woman who shot her husband when she found him with his mistress in the pool. Domestic violence calls are among the most dangerous for police officers. Giving police additional suspicion that may not be accurate probably won't reduce the number of accidental shootings or incidents of police brutality.

The worst part, however, is that none of these questions and concerns can be answered, because Intrado, the company that makes Beware, doesn't reveal how its algorithm works. Chances are slim that it ever will, since doing so would also reveal the algorithm to its competitors. There's no way of knowing how accurate the data behind any given search is. Police are handed red, yellow or green to help them make a life-changing or life-ending decision. It seems a little primitive, not to mention intrusive.

“It is deeply disturbing that local law enforcement agencies are unleashing the sophisticated tools of a surveillance state on the public with little, if any, oversight or accountability,” Ryan Kiesel of the Oklahoma ACLU told me. “We are in the middle of a consequential moment in which the government is unilaterally changing the power dynamic between themselves and the people they serve. If we are going to preserve the fundamental right of privacy, it is imperative that we demand these decisions are made as the result of a transparent and informed public debate.”

While mass shootings are on the rise, violent crime and homicides have fallen to historic lows. You wouldn’t know that watching the evening news, however. Is now really the time to increase the chances of violent actions at the hands of the police, all while intruding on our civil liberties under the guise of safety?
