Two Years Behind Bars or 20? One Day, a Computer Formula May Have a Say

Influenced by algorithms, judges who are racially biased might actually hand out milder sentences to minority defendants. But some experts worry that, in the big picture, an imperfect science may work against the accused. Maksim Kabakou / Shutterstock


Imagine this scene: A criminal is standing in the courtroom. He is waiting to hear the judge’s sentence and learn how the rest of his life will unfold. While he waits, complex algorithms are employed to analyze details of his case and decide his fate.

This could be the future—sentencing American citizens based on statistics.

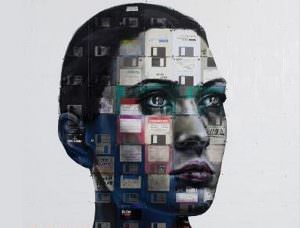

There’s already a significant movement in the United States to use predictive algorithms to sentence criminals. These algorithms analyze a person’s criminal record, age, gender, family background and other data to determine whether he or she is likely to reoffend after serving a specific period of time. Ideally, judges who may be biased will be less likely to give defendants longer sentences based on their personal feelings. But some worry that using algorithms could actually reinforce biases.

“By basing sentencing decisions on static factors and immutable characteristics—like the defendant’s education level, socioeconomic background or neighborhood—they may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society,” said former Attorney General Eric Holder in a speech in August to the National Association of Criminal Defense Lawyers.

What Holder was getting at is that an algorithm built on historical sentencing data, or on information connected to race, might reinforce the legacy of a criminal justice system known to be biased against minorities, resulting in inordinately long sentences. The same concerns apply to algorithms used to predict which areas are likely to experience the most crime, to determine a defendant’s bail or to decide whether he or she should be paroled.

“Because these algorithms are using past data to build the algorithm, and past data is by definition skewed because of the history of racial discrimination in the U.S. and everywhere, the result is the algorithms are necessarily reproducing these inequalities,” Angèle Christin, a postdoctoral fellow at the Data & Society Research Institute, told Truthdig. “While of course race is never included in the statistical models that are being used to build these algorithms, you have many variables that can serve as proxies. For example, some models rely on ZIP codes or the criminal records of family members, which are significantly correlated with race.”

As a result, Christin explained, defendants could end up serving a longer sentence just because they live in an urban area with a high crime rate or have family members who have been convicted of crimes, both situations that are more common for minorities in America.
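To make the proxy problem concrete, here is a minimal sketch in Python. It is not any real risk-assessment tool: the `Defendant` fields, the weights and the `ZIP_CRIME_INDEX` values are all made up for illustration. The point is only that a formula can leave race out entirely and still score two otherwise identical defendants differently because of where they live and who their relatives are.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Defendant:
    """Hypothetical inputs; note that race is not a field."""
    prior_convictions: int
    age: int
    zip_code: str
    relatives_with_records: int


# Made-up neighborhood crime index per ZIP code (illustrative, not real data).
ZIP_CRIME_INDEX = {"00001": 0.2, "00002": 0.8}


def risk_score(d: Defendant) -> float:
    """Toy weighted sum with assumed weights; higher means 'riskier'."""
    score = 1.0 * d.prior_convictions
    score += 0.5 if d.age < 25 else 0.0
    # The two proxy variables Christin mentions: ZIP code and family records.
    score += 2.0 * ZIP_CRIME_INDEX[d.zip_code]
    score += 0.5 * d.relatives_with_records
    return score


# Same age and same criminal record; only the proxy variables differ.
a = Defendant(prior_convictions=1, age=30, zip_code="00001", relatives_with_records=0)
b = Defendant(prior_convictions=1, age=30, zip_code="00002", relatives_with_records=1)

print(risk_score(a), risk_score(b))
```

In this toy setup the second defendant receives the higher score even though both have the same record. If the proxy variables track race in the real world, as Christin says ZIP codes and family criminal records do, the score tracks race too.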

Algorithms have been employed in criminal justice for risk assessment and other uses for decades, but algorithm technology has become much more sophisticated, so courts are starting to take it more seriously, Christin said.

In addition to racial issues, some are concerned that the use of algorithms will result in unnecessarily harsh sentences in scenarios in which there’s a detail about the case for which the algorithm can’t account. This is one of the problems with mandatory minimum sentences, in cases where a judge would prefer to give someone a less harsh sentence because of extenuating circumstances but ultimately can’t do so.

However, Christin said, “The parallel with mandatory minimum sentencing and the sentencing guidelines is interesting, but the situation is different because risk-assessment tools do not provide binding recommendations.” As it stands, judges are not bound to follow algorithm-derived results, but if judges merely see the results of such technology there could be serious impacts on sentencing outcomes. Christin said many psychologists and sociologists have determined that the scientific nature of algorithm technology makes people more likely to follow algorithm-based recommendations. “When you have algorithms providing a score that has some kind of scientific legitimacy behind it to support it, you’re more likely to follow it,” she said.

Nazgol Ghandnoosh, a research analyst at The Sentencing Project, said she sees how algorithms could be useful. “We want to have a balance [with] some systematized decision making and at the same time we want criminal justice professionals to use their expertise to customize decisions, but we know if we just let that happen and don’t monitor it, that’s going to produce racially biased outcomes,” she said.

Because every factor can be customized and tracked when using algorithms, it’s possible to be keenly aware of how the sentence was decided, Ghandnoosh said. Judges, by contrast, may weigh many factors that others aren’t aware of when reaching a decision. Ultimately, she said, “I think [algorithms are a] good step toward making decision-making more systematic, but there are a lot of challenges to figure out, like how to not reinforce and solidify racial disparities.”

According to Christin, the fact that algorithms can be tailored provides hope for algorithmic justice. She said statisticians and computer scientists are working on ideas for addressing racial bias. For instance, factors connected to race could simply be removed from an algorithm, although then it might be less accurate, because there would be far less data involved. Alternatively, constraints that suggest that white and minority offenders present equal levels of risk could be embedded in an algorithm. The problem with that idea is that it could raise the question of whether other constraints, such as those for age, wealth or gender, should have been included. Constraints such as these could change the effectiveness of algorithms in ways not yet fully understood.

Just looking at a defendant’s criminal record to decide a sentence could be racially biased, Ghandnoosh argues. “Criminal history measures criminal justice policies,” she said, adding that “people of color are more likely to be surveilled and arrested and convicted” for crimes, especially less serious ones. The fact that police departments tend to focus more on minorities means minorities are more likely to be arrested, which means members of these groups are more likely to have criminal records in the first place.

There are no foolproof, absolutely scientific ways to reduce sentencing biases, but it’s likely that algorithms will play a major part in criminal justice decisions in the near future. And when a formula is used to decide your fate, you’d better hope it’s a good one.
