How Our Worlds Are Decided for Us From Behind the Computational Curtain

On websites like Facebook, our selves are not more free; they are more owned. And they are owned because we are now made of data. (New York University Press)

The following excerpt is from “We Are Data: Algorithms and the Making of Our Digital Selves” (New York University Press) and is reprinted with permission of the publisher.

This, the ability to take real-world phenomena and make them something a microchip can understand, is, I think, the most important skill anyone can have this day. Like you use sentences to tell a story to a person, you use algorithms to tell a story to a computer. — Christian Rudder, founder of OkCupid

We are made of data. But we are really only made when that data is made useful. This configuration is best described by the technologist wet dream in the epigraph. For the cyberutopians who believe this stuff, we are a code, a Heideggerian standing reserve that actualizes us through technology. Or more appropriately, we are a rudimentary cyborgism laid bare by Microsoft’s rhetoric: inside us is a code; that code represents not us but our potential; and despite the code being inside of us and signifying our potential, it must be unlocked by (Microsoft) technology.

Microsoft’s “Potential” recruiting video is wonderful because it so transparently celebrates a world where corporate profit goes hand in hand with digital technology, which goes hand in hand with capitalist globalization, which goes hand in hand with the colonization of our own futures by some super-intense neoliberal metaphor of code. One might ask, does this code ever rest? Does it have fun, go to a soccer match, or drink a glass of wine? Or is its only function to work/use Microsoft products? The code inside us seems like the kind of code who stays late at the office to the detriment of friendship, partnership, or possibly a child’s birthday.

Yet Microsoft’s overwrought voice-over copy superficially grazes the more substantial consequence of “inside us all there is a code.” If we are made of code, the world fundamentally changes. This codified interpretation of life is, at the level of our subjectivity, how the digital world is being organized. It is, at the level of knowledge, how that world is being defined. And it is, at the level of social relations, how that world is being connected. Of course, we don’t have to use Microsoft products to unleash our potential. That’s beside the point. But Microsoft’s iconic corporate monopoly works as a convenient stand-in to critique the role that technology has in not just representing us but functionally determining “who we might become.”

Representation plays second fiddle if Microsoft can quite literally rewrite the codes of race, class, gender, sexuality, citizenship, celebrity, and terrorist. Rewriting these codes transcodes their meaning onto Microsoft’s terms. Microsoft becomes the author of who ‘we’ are and can be.

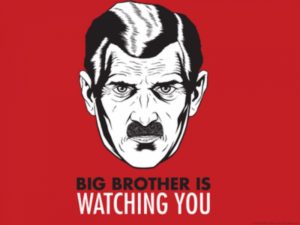

Asymmetry defines this type of emergent power relation. Power (be it state or capital) classifies us without querying us. And those classifications carry immense weight to determine who possesses the rights of a ‘citizen,’ what ‘race’ and ‘gender’ mean, and even who your ‘friends’ are. Indeed, the central argument of this book is that the world is no longer expressed in terms we can understand. This world has become datafied, algorithmically interpreted, and cybernetically reconfigured so that I can never say, “I am ‘John’” with full comprehension of what that means. As a concrete subjective agent, ‘John’ can’t really exist. However, ‘John’ can be controlled.

This apparent paradox fits with the famed doublethink slogan that defines George Orwell’s political dystopia of 1984: “who controls the past controls the future; who controls the present controls the past.” To control the present is to control everything that comes before as well as everything that happens after. So what happens when you don’t just control the present but also construct it? Novelist Zadie Smith supplies us an answer in her own review of the 2010 film about Facebook, The Social Network:

When a human being becomes a set of data on a website like Facebook, he or she is reduced. Everything shrinks. Individual character. Friendships. Language. Sensibility. In a way it’s a transcendent experience: we lose our bodies, our messy feelings, our desires, our fears. It reminds me that those of us who turn in disgust from what we consider an overinflated liberal-bourgeois sense of self should be careful what we wish for: our denuded networked selves don’t look more free, they just look more owned.

On Facebook, our selves are not more free; they are more owned. And they are owned because we are now made of data.

Let’s consider an example from Facebook, where researchers have reconceptualized the very idea of ‘inappropriate’ with a new feature that aims to introduce a bit more decorum into our digital lives. An automated “assistant” can evaluate your photos, videos, status updates, and social interactions, scanning them all for what Facebook thinks is ‘inappropriate’ content. When you post something that Facebook considers unbecoming, your algorithmic assistant will ask, “Uh, this is being posted publicly. Are you sure you want your boss and your mother to see this?”

That Facebook will know ‘inappropriate’ from ‘appropriate’ is beholden to the same presumption that Quantcast knows a ‘Caucasian,’ Google knows a ‘celebrity,’ and the NSA knows a ‘terrorist.’ They don’t. All of these are made up, constructed anew and rife with both error and ontological inconsistency. Yet despite this error, their capacity for control is impressively powerful. Facebook’s gentility assistant, if it ever gets implemented, will likely have more import in framing acceptable behavior than any etiquette book ever written has.

These algorithms may “unlock” your potential—or, in the case of Facebook, may curate your potential—but not in the way that Microsoft wants you to believe. Microsoft’s prose suggests you have some static DNA hard coded into your system that is just waiting to succeed in the marketplace. Trust me (and anyone who isn’t your parent), you don’t. What you do have is life, and one brief look at contemporary capitalism’s CV will demonstrate its terrifying competence to colonize that life with its own logic and motives. What these algorithms do “unlock” is the ability to make your life useful on terms productive for algorithms’ authors.

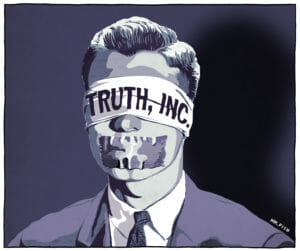

Imagine a world where a company like Google doesn’t just gatekeep the world’s information but also controls how we describe that world. This is the precise problem we encounter when discourse is privatized, although not the way people usually talk about the crisis of privatized knowledge. Sure, public libraries are losing funding, museums are closing, and even academic journals are pettily protecting copyright for the articles that scholars write for free. That’s one kind of privatization. But much like Google has the ability to make a ‘celebrity’ and Facebook to define ‘inappropriate,’ Google, Facebook, and others are on their way to privatizing “everything.”

Privacy and Privatization

By privatizing “everything,” I really mean everything. Love, friendship, criminality, citizenship, and even celebrity have all been datafied by algorithms we will rarely know about. These proprietary ideas about the world are not open for debate, made social and available to the public. They are assigned from behind a private enclosure, a discursive trebuchet that assails us with meaning like rabble outside the castle walls.

The consequence of this privatization is that privacy has become inverted, not in its definition but in its participants and circumstances. A site like OkCupid has privacy from us, but we don’t have privacy from OkCupid. We don’t know how our data is being used. We can’t peek inside its compatibility match algorithm. But every little thing we do can be watched and datafied.

Google’s algorithm orders the world’s knowledge and thus frames what is and is not ‘true,’ using our own search data and its catalogue of our web habits to improve its functioning. But the machinations of Google search will always be hidden, intellectual property that we can never, ever know. Google has no obligation to tell us how it makes ‘us’ or the ‘world’ useful. But in order to use each site, via its terms of service, we must agree not to have privacy. We agree to let our datafied ‘us’ be mined and made meaningful according to the whims of site operators. Each site’s legal terms of service are the lived terms of life online.

In fact, via the terms of service for a site like Facebook, not only do we not have privacy, but our data (our photos, videos, even chats) doesn’t even belong to us. Data, more often than not, belongs to whoever holds it in the cloud. The semantic resemblance of privacy and privatization can be twisted together, a slippery neoliberal convergence by which the two concepts almost eagerly fold into one.

We can trace this neoliberal folding back to the Internet of the 1990s, around the time when AOL’s famous “terms of service” were king and the company served a deluge of “TOS violations” to software pirates and explicit-language users. AOL’s terms strictly forbade both proprietary software trading and unseemly discourse. The resulting corporate cyberspace wasn’t the unruly anarchist paradise feared/hoped for by some people but rather a highly controlled platform for “civility” and “security” that made AOL’s paying customers feel, well, civil and secure.

In a love-it-or-leave-it policy, any user who did not accept the stringent terms of AOL’s service was disallowed access. Anyone who accepted them but later sinned was eventually banned. Users became indebted to these terms as they prescribed exactly how the network could be used. And a crucial feature of those terms was that your datafied life belonged to AOL, not you. Owners of cyberspaces like AOL drafted their own terms in order to give them sovereignty over their digital space, and the resulting discursive sphere was soon governed by AOL’s legal code.

Quite predictably, this privatized cyberspace became business space, its business model became advertising, its advertising got targeted, and its targets were built with this possessed data. As communication scholar Christian Fuchs has detailed, the data gathered in these privatized spaces became the constant capital by which profit would be produced, echoing the most basic of capital relations: we legally agree to turn over our data to owners, those owners use that data for profit, and that profit lets a company gather even more data to make even more profit. So while the terms of cyberspace are defined by a company like AOL, the knowledges that make our datafied worlds are similarly owned. But beyond the fundamentals of capitalist exploitation, the baseline premise of data being ownable has a provocative ring to it. If we could mobilize and change Facebook’s terms of service to allow us true ownership over our Facebook profiles and data, then we could wrest some control back. In this way, ownership is interpreted as a liberatory, privacy-enhancing move.

Tim Berners-Lee, inventor of the World Wide Web and leading voice against the commercialization of the Internet, believes our data belongs to you or me or whoever births it. In a way, our datafied lives are mere by-products of our mundane entrepreneurial spirits, a tradable commodity whose value and use we, and we alone, decide. One step further, computer scientist Jaron Lanier has argued for a reconceptualized economic infrastructure in which we not only own but ought to be paid for our data: the profits reaped by the million/billionaires in the tech world should trickle down to those who make them their millions/billions.

Whether owned by a corporation or ourselves, our data (and by default our privacy) finds comfortable accommodations in the swell of capitalist exchange. Indeed, Lanier’s approach reincorporates the costly externalities of privacy invasion back into the full social cost of dataveillance. But to frame privacy in terms of ownership locates the agent of privacy as some unique, bodied, named, and bank-accountable person. A dividual can’t receive a paycheck for her data-used-by-capital, but John Cheney-Lippold can.

This discussion eventually gets subsumed into a revolving circuit of critiques around what theorist Tiziana Terranova has coined “free labor.” The digital economy, as part of a capital-producing ecosystem, reconfigures how we conceive of work, compensation, and even production. If our datafied lives are made by our own actions, they order as well as represent a new kind of labor. You on OkCupid, diligently answering questions in the hope of romantic partnership, produce the knowledges that feed the site’s algorithmic operations. OkCupid’s value and profit margins are dependent on these knowledges.

This is where Italian autonomists have argued the “social factory” has relocated to, reframing the relation between capital and labor outside the factory walls and into the fabric of social life itself. By commodifying our every action, the social factory structures not just labor but life within the rubric of industry. This is now an unsurprising reality of our daily, networked existence. But requisite in such commodification is the reversal of privacy.

The reversal of privacy lets dataveilling agents make us useful without us knowing what that use is. I can’t find out what Google’s ‘gender’ looks like (I’ve tried), although I do know I am a ‘woman.’ And I can’t find out what Quantcast’s ‘ethnicity’ looks like (I’ve tried), but neither do I know what Quantcast thinks I am. The privatized realms of measurable-type construction are owned by the other, sometimes hidden, other times superficially shown, but almost never transparent in how knowledges about the world, the discourses through which we’re made subject, are created and modulated.

Microsoft’s code is much more than a metaphor. It’s an empirical, marketable commodity that defines the things we find important now (race, gender, class, sexuality, citizenship) as well as the things that we will find important in the future. When privacy is reversed, everything changes. Our worlds are decided for us, our present and futures dictated from behind the computational curtain.

John Cheney-Lippold is assistant professor of American culture at the University of Michigan.
