Go West, Weird Man

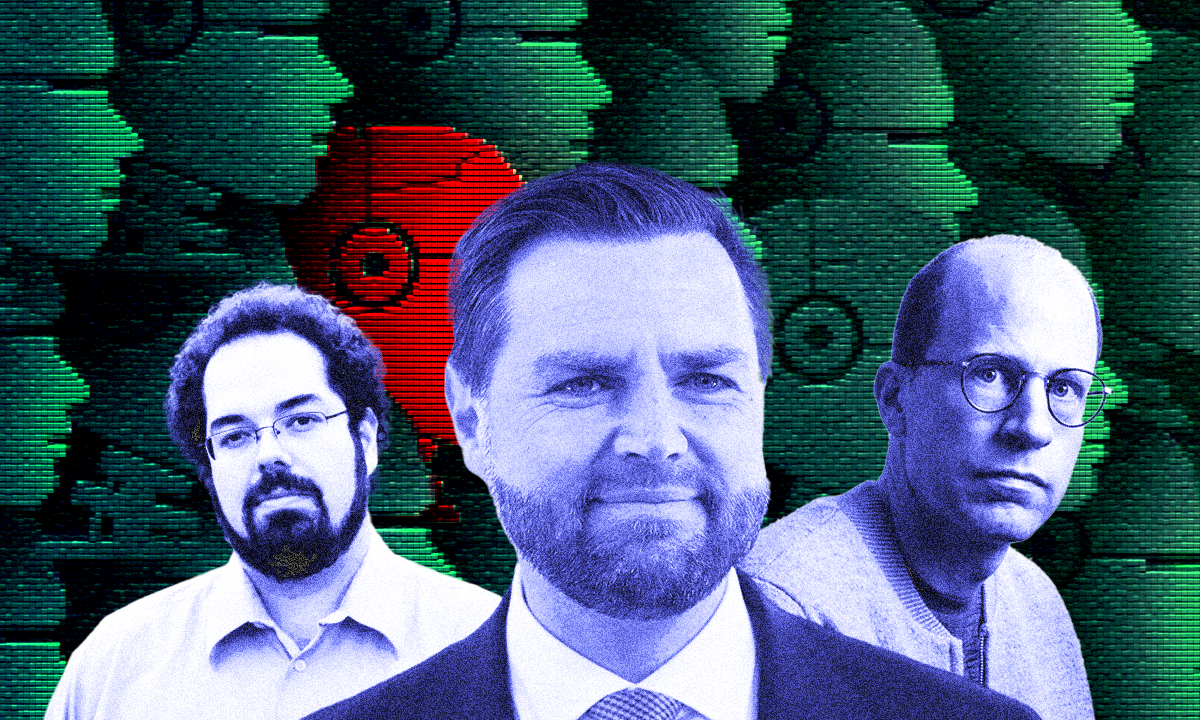

The oddballs of the MAGA movement have nothing on Silicon Valley transhumanists.

Big Tech and its 'weird' problem. Eliezer Yudkowsky, JD Vance and Nick Bostrom. Graphic by Truthdig. Images sourced via AP Images and Adobe Stock.


The latest Democratic line of attack targeting Trump, his running mate JD Vance and the MAGA movement is to call them “weird.” Not the good “weird,” in the mold of David Bowie or Albert Einstein, but bad weird, in a way most Americans find profoundly creepy. Trump saying that he would be dating Ivanka if she weren’t his daughter, or Vance talking about a conspiracy of “childless cat ladies” — that kind of weird. Social media is overflowing with examples of MAGA followers and officials doing things like donning “Real Men Wear Diapers” shirts and amorously rubbing the nether regions of a Trump cutout.

Unlike Hillary Clinton’s “basket of deplorables” comment in 2016 — which backfired spectacularly — this effort to “bully the bullies” appears to be sticking, because the charge rings true, as noted by “The Daily Show’s” Ronny Chieng: “You can’t say, ‘Guys, guys, I’m not weird!’ Because that sounds weird.”

But the modern GOP does not have a monopoly on weird in American life. In Silicon Valley, a bizarre techno-utopian movement that transcends the political spectrum is arguably the weirdest current in society. Together with Timnit Gebru, I have christened this movement “TESCREALism.” It is an admittedly clunky acronym for the following “-isms,” all of them very weird: transhumanism, extropianism, singularitarianism, cosmism, rationalism, effective altruism and longtermism.

I am intimately familiar with the weirdness of this movement, because I used to be a part of it. In 2017, before I realized that the eugenic fantasies at the heart of TESCREAL utopianism are irredeemably flawed, I was invited to present my work at a two-month-long workshop in Sweden. A number of noted TESCREALists were there, including Anders Sandberg, Robin Hanson and James Miller. One day, I noticed that Sandberg was wearing a dog tag. I asked him why, and he explained that it’s because he signed up with a cryonics company called Alcor, which cryogenically freezes dead bodies so that they can be resurrected at some future date, when the necessary technology becomes available. Asking around, I discovered that Sandberg wasn’t the only one: Hanson and Miller had also signed up (and later I found out that other leading figures like Nick Bostrom and Eliezer Yudkowsky have also signed up with cryonics companies to have themselves frozen if they die).


Jokingly, I asked one of them, “If you wear a bike helmet when you’re on your bicycle, why wouldn’t you wear it when you’re, say, walking through the city? After all, resurrection depends on your brain not being damaged, so it seems odd that you’d protect yourself in one situation but not the other!” I meant this to be facetious. But my interlocutor replied that, in fact, they do wear a bike helmet in all such situations: not only when they’re walking through the city or hiking through the woods, but even when they’re driving their car.

Why take the risk when the stakes are immortality?

Several years ago, I was speaking with the editor of an influential leftist magazine about how we’re both critical of the TESCREAL ideologies. We chuckled when we agreed that one of the best ways to “critique” the TESCREAL movement is to simply describe what TESCREALists believe. These ideologies are so strange that most people will naturally recoil when presented with an accurate picture of what they are. Some ideas are so bonkers that they undermine themselves. From the goal of reengineering humanity to creating trillions of digital people in computer simulations to mass surveillance to warnings that AGI could synthesize “diamondoid bacteria” to gleeful claims that “intelligent machines” should take our place in the universe, this is a very odd set of worldviews. And not the “good” kind of “odd,” but the sort of “odd” that should make all of us fearful that the TESCREAL ideologies have become so pervasive within Silicon Valley — and among our Big Tech oligarchs, some of whom have amassed as much wealth as entire countries, such as New Zealand and Qatar.

You don’t need to understand each of the constituent ideologies to get the essence of this techno-utopian vision of the world. What’s most important is the “T,” which stands for “transhumanism,” the backbone of the TESCREAL bundle from which all the other ideologies emerged. Transhumanism is a variant of traditional eugenics that instructs us to develop advanced technologies so that we can radically reengineer ourselves, thus creating a “superior” new “posthuman” species that might eventually take our place.

Transhumanism is everywhere in Silicon Valley — in many ways, it’s the air that Valley-dwellers breathe, the water in which they swim. It’s why Elon Musk’s company Neuralink is trying to merge our brains with AI, and it’s consistent with SpaceX’s aim to “extend” what Musk calls the “light of consciousness” into the universe. It’s also a major reason that billion-dollar companies such as DeepMind, OpenAI, Anthropic and xAI are trying to build the first artificial general intelligence, or AGI. By creating human-level or superhuman AGIs, we could vastly increase our chances of fulfilling the transhumanist project of becoming a new species of immortal, hyper-rational posthuman beings.

Let’s take a look at this techno-utopian vision and some of the people who’ve been instrumental in shaping it over the past 30 years or so, beginning with Nick Bostrom, one of the most influential transhumanists today and a cofounder of longtermism, the “L” in “TESCREAL.” It’s hard to pick the “weirdest” idea that Bostrom has championed because there are so many to choose from. For example, take his claim that we should seriously consider implementing a global surveillance system that monitors every minute action of everyone in the world. To illustrate the proposal, he imagines you and me being “fitted with a ‘freedom tag,’” a kind of wearable surveillance device like “the ankle tag used in several countries as a prison alternative, the bodycams worn by many police forces, the pocket trackers and wristbands that some parents use to keep track of their children,” and so on.

What’s the point of this? Bostrom reasons that if “we” were to create technologies that enable a large number of people to unilaterally destroy civilization, we might prevent this from happening via a “high-tech panopticon” that watches everyone, everywhere, every second of the day. In a subsequent TED talk Q&A, Bostrom makes it clear that he’s serious about this proposal, and in fact he published the article introducing the idea in a well-respected policy journal called “Global Policy.”

But, of course, who exactly would want to live in a world with zero privacy? Maybe we shouldn’t try to develop such dangerous technologies in the first place? To this, Bostrom and his TESCREAL colleagues would strongly object, because these very same technologies may also be necessary to create the techno-utopian fantasyland imagined by TESCREALists like him. Though creating these technologies might cause our extinction, if we never create them, we’ll definitely never get utopia. The question is thus how to create them without this backfiring — and Bostrom’s answer is: Maybe mass surveillance is the solution!

Why does it matter whether we go extinct or not? The obvious answer is that going extinct would likely cause enormous amounts of human suffering. If some omnicidal psychopath were to synthesize a designer pathogen and release it around the world, 8 billion people could die extremely painful deaths.

However, TESCREALists like Bostrom don’t think that this is the worst part of human extinction. For them, the worst part would be all the “lost value” that could otherwise have existed millions, billions and trillions of years from now. On this account, which is very popular within the TESCREAL movement, our ultimate aim should be not only to remake ourselves into “posthuman” beings, but to colonize as much of the accessible universe as possible. Once we’ve spread to other solar systems and galaxies, Bostrom argues, we should build literally “planet-sized” computers on which to run huge virtual-reality worlds full of trillions and trillions of “digital people.” The loss of these people is the real cost of human extinction, an idea that could be summarized as: “Won’t someone think of all the digital unborn?” According to an estimate from the TESCREAList Toby Newberry, there could be around 10⁴⁵ digital people (a one followed by 45 zeros) in the Milky Way galaxy per century, while Bostrom himself calculates at least 10⁵⁸ digital people in the universe as a whole. These numbers dwarf the total human population today, which, according to them, is what makes the future much more important than the present.


This is the ultimate goal of many TESCREALists: to realize this “vast and glorious” future as digital posthumans among the heavens — to quote another leading TESCREAList. That leads us back to AGI, or what the CEO of OpenAI, Sam Altman, calls the “magic intelligence in the sky.” The TESCREAL movement sees AGI as probably the single most important invention ever — not just in human history, but quite possibly in cosmic history. Why is this? Because, on the one hand, if we create an AGI that is “unsafe,” it will by default kill everyone on Earth, thereby destroying the “glorious transhuman future” that awaits us. On the other hand, if we create an AGI that is “safe,” it will give us everything that “we” want: radical abundance, immortality and space colonization. AGI is the ultimate double-edged sword.

There are two views about AGI among TESCREALists: so-called “doomers” think that building a “safe” AGI is going to be really difficult. As Yudkowsky, the most famous doomer, writes in Time magazine: “The most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.” In contrast, “accelerationists” maintain that the most probable outcome will be paradise. Both positions are very weird in their own particular ways.

Consider first the doomers. How do they believe that AGI could kill everyone on Earth? Yudkowsky argues that the AGI could build currently nonexistent, hypothetical new technologies like nanotechnology. It could then use nanotechnology to synthesize what he calls “diamondoid bacteria” that “replicate with solar power and atmospheric [chemical elements], maybe aggregate into some miniature rockets or jets so they can ride the jetstream to spread across the Earth’s atmosphere, get into human bloodstreams and hide.” This is scary, Yudkowsky tells us, because “at a certain clock tick, everybody on Earth [could fall] over dead in the same moment.”

To be clear, “diamondoid bacteria” do not exist. They are science fiction. But Yudkowsky is extremely worried that they’re exactly the sort of fantastical thing that a superintelligent AGI might create to murder the entire human population. This scenario is so far removed from reality that it’s a wonder people take it seriously — yet a large number of people within the TESCREAL movement do take it seriously. Yudkowsky was even asked to give a TED talk on the topic after publishing his Time magazine article.

Bostrom’s “doomer” views are no less peculiar. In his 2014 bestseller Superintelligence, which was promoted by Musk, he argues that AGI could build “nanofactories.” Once built, the AGI could hatch a plan to use these nanofactories to produce “nerve gas or target-seeking mosquito-like robots” that suddenly “burgeon forth simultaneously from every square meter of the globe.” In a flash, the AGI would then eradicate the whole human species amid a swarm of lethal buzzing robotic insects. Bostrom claims that the “default outcome” of an “unsafe” AGI will be the extinction of humanity and, consequently, the permanent erasure of all the unborn digital people who could have otherwise existed in massive computer simulations spread throughout the cosmos — where the loss of these unborn people would be, once again, by far the worst part of our untimely extinction.

The accelerationist wing of the TESCREAL movement is more sanguine about AGI, but no less strange. In fact, some are explicit that “intelligent machines” should replace humanity, and that we ought not resist this transition to a new age in which machines rule the world. For example, the billionaire cofounder of Google, Larry Page, argues that “digital life is the natural and desirable next step in … cosmic evolution and that if we let digital minds be free rather than try to stop or enslave them, the outcome is almost certain to be good.” Or consider Guillaume Verdon, who goes by the name “Beff Jezos” on X. When he was asked whether preserving the “light of consciousness” requires humanity to stick around, he said no, adding that he’s “personally working on transducing the light of consciousness to inorganic matter,” by which he means computer hardware. Yet another example comes from Hans Moravec, a Carnegie Mellon University roboticist who has been hugely influential in the development of TESCREALism. In one article, he describes himself as “an author who cheerfully concludes that the human race is in its last century, and goes on to suggest how to help the process along.”

These grandiose visions of the future would be laughable if they weren’t so ubiquitous within the tech world. Bostrom, Yudkowsky and the others aren’t fringe figures, but among the most prominent members of the TESCREAL movement, which has shaped research programs and launched major Silicon Valley companies that are changing our world in both subtle and profound ways. As Gebru and I argue, all of the leading AI companies — DeepMind, OpenAI, Anthropic and xAI, which have AGI as their explicit goal — directly emerged out of the TESCREAL movement.


Why are these companies trying to build AGI if some leading TESCREALists believe it’s so dangerous? Altman admits that “AI will … most likely sort of lead to the end of the world,” and Musk, who founded xAI after cofounding OpenAI with Altman, affirms that “the danger of AI is much greater than the danger of nuclear warheads by a lot.” How does this make sense?

On the one hand, the AI doomers in these companies want to build AGI because they’re worried that other, less “safety-minded” companies might reach the AGI finish line first, which could be catastrophic for humanity and our long-term future in the cosmos. Doomerism inadvertently reinforces the arms-race dynamics of everyone rushing to reach the AGI finish line, because each group believes the others are less responsible than they are.

On the other hand, there are also many accelerationists in such companies who don’t think the risk of AGI killing everyone is very high. They typically add that whatever risks there might be are best solved by the free market, so we should not only accelerate the rate of “progress” toward AGI but open-source everything to ensure that any bad AGIs are countered by an even larger number of good AGIs. This idea can be sloganized, in NRA fashion, as: “The only thing that can stop a bad AGI is a good AGI.” Some accelerationists, such as the billionaire Marc Andreessen, even argue that slowing down AGI research “will cost lives” because it will deprive humanity of the utopian benefits that would otherwise have obtained, and hence that people who impede this “progress” are quite literally no better than murderers.

Although I do not know exactly what Vance believes, he got his start through Silicon Valley, one of the two birthplaces of TESCREALism (the other being Oxford in the United Kingdom). I have no doubt that Musk, Peter Thiel and others pressured Trump to select Vance in part because of that connection: a Trump-Vance win would probably mean far fewer regulations on tech, which means more wealth in the pockets of our Big Tech oligarchs. And more wealth in the pockets of these tyrannical dictators of capitalism, in turn, means more power to ensure the realization of the techno-utopian visions that are driving some of the most powerful corners of the tech industry — including the race to build AGI.

Though Trump and Vance have said some very weird things, the future that our TESCREAL overlords are pushing may be even weirder. It’s very important for Democratic leaders and voters alike to understand this: These people are serious when they talk about merging our brains with AI, spreading into space, and creating huge virtual-reality worlds of the kind the “metaverse” was supposed to be. In 2022, Musk even shared a link to an article by Bostrom arguing that we should populate the universe with as many digital people as possible, in a post that included the line “Likely the most important paper ever written,” while Altman similarly declared that “I do not believe we can colonize space without AGI.” Though views differ about the riskiness of AGI in the near term, the ultimate vision is the same, and AGI is an integral part of realizing that vision.

As Kamala Harris emphasizes that her campaign is “focused on the future,” whereas “Donald Trump’s is focused on the past,” we must not forget that many of the tech billionaires promoting Trump are also focused on the future. Most of us would agree, though, that creating this extremely weird, sci-fi future world full of digital beings in other galaxies is not worth destroying the present, which is what a second Trump presidency would do.
