The Eternal Recurrence of Human Hubris

The debate over whether AI can truly "think" must begin by acknowledging the continuing mystery of human cognition. Image: Adobe


Editor’s note: The following story is co-published with Freddie deBoer’s Substack.

In the waning years of the 1800s, during the late Victorian era, some came to believe that human knowledge would soon be perfected. This notion was not entirely unjustified. Science had advanced by great bounds in a few decades, medicine was finally being researched and practiced in line with evidence, industry had dramatically expanded productive capacity, and mechanized transportation had opened the world. Humanity was on the move.

This progress and the confidence that attended it were perhaps most evident in physics; essential concepts in electromagnetism, thermodynamics, and mechanics had transformed the field. In a few short decades, the macroscopic workings of the physical world had been mapped with incredible precision, moving science from a quasi-mystical pursuit of vague eternal truths to a ruthless machine for making accurate predictions about everything that could be observed. This rapid progress had not gone unnoticed by those within the field. Though there were certainly many who felt otherwise, some giants within physics could see the completion of their project in the near distance. In an 1899 lecture, the famed physicist Albert A. Michelson was confident enough to say that “the more important fundamental laws and facts of physical science have all been discovered, and these are now so firmly established that the possibility of their ever being supplanted in consequence of new discoveries is exceedingly remote.” Twenty years earlier, his German contemporary Johann Philipp Gustav von Jolly had told a young Max Planck that “in this field, almost everything is already discovered, and all that remains is to fill a few unimportant holes.” However cautious their peers may have been, this basic notion – that physics had more or less been solved by the late Victorian era – reflected a real current of opinion.

In biology, too, the development of evolution as a scientific theory was hailed as something like the completion of a major part of the human project; the notion of Darwinian ideas as the skeleton key to the science of life became widespread once evolution was generally accepted as true. Of course there was always more work to be done, but this was another broad scientific concept that felt less like an iterative step in a long march and more like reaching the summit of a mountain. Even better, while Charles Darwin’s On the Origin of Species had not commented on human social issues, his theories were quickly drafted into that effort. “Many Victorians recognised in evolutionary thinking a vision of the world that seemed to fit their own social experience,” the scholar of the Victorian era Carolyn Burdett has written. “Darwin’s theory of biological evolution was a powerful way to describe Britain’s competitive capitalist economy in which some people became enormously wealthy and others struggled amidst the direst poverty.” Thus advances in science increasingly served as a moral backstop for the sprawling inequality of Victorian society.

By the end of the Victorian era the philosopher and polymath Bertrand Russell was busily mapping the most basic elements of mathematics and tying them to formal logic. The possibility of digital computing had been demonstrated, as electrical switching circuits had been used to express basic logical operations. If some fields were described as essentially solved, and others as in their infancy, both suggested that humanity had taken some sort of epochal step forward, that we were leaving past ways of life behind.


This sense that the human race had advanced to the precipice of a new world was not merely the purview of scientists. Poetry signaled in the direction of a new era as well, though poets, as is their habit, were more ambivalent about it. On the optimistic side, in “The Old Astronomer to His Pupil,” Sarah Williams imagined a meeting with the 16th-century astronomer Tycho Brahe, writing “He may know the law of all things, yet be ignorant of how/We are working to completion, working on from then to now.” She writes confidently that her soul will in time “rise in perfect light,” much like the progress of science. Alfred, Lord Tennyson, the consummate Victorian poet, was gloomier. He had anticipated the utopian outlook in his early work “Locksley Hall,” published in the first five years of Queen Victoria’s reign. The poem finds a lovelorn man searching for a cure for his sadness and imagining the next stage of human evolution. He feels resigned to inhabit a world on the brink of epochal change. He describes this evolution in saying “the individual withers, and the world is more and more.” The line is certainly pessimistic, but as the editors of Poetry Magazine once wrote, it reflects “that expanding, stifling ‘world’ [which] saw innumerable advances in the natural and social sciences.” The individual was withering because the imposition of progress had grown more and more inescapable.

The late Victorian period also saw the British Empire nearing its zenith, and the British Empire thought of itself as coterminous with civilization; for the people empowered to define progress, back then, progress was naturally white, and specifically British. The subjugation of so much of the world, the fact that the sun never set on the British Empire, was perceived to be a civilizing tendency that would bring the backward countries of the world within the penumbra of all of this human advancement. The community of nations itself seemed to be bent towards the Victorian insistence on its own triumph, never mind that only one nation was doing the bending. Queen Victoria’s diamond jubilee in 1897 provoked long lists of the accomplishments of the empire, including the steady path toward democracy that would leave her successors as little more than figureheads.

And then the 20th century happened.

Those familiar with the history of science will note the irony of Michelson’s confident statement. It was the Michelson-Morley experiment, undertaken with his colleague Edward Morley, that famously failed to detect the luminiferous aether, the substance through which light waves supposedly traveled. That null result revealed a hole in the heart of physics; among other things, light’s ability to travel without a medium helped demonstrate its dual identity as a particle and a wave, a status once unthinkable. And it was the mysteries posed by that experiment that led Albert Einstein to special relativity, which, when revealed in 1905, undermined those fundamental laws and facts that Michelson had so recently seen as unshakable. The Newtonian physics that had governed our understanding of mechanics still produced accurate results in the macro world, but our understanding of the underlying geometry of the universe it described was changed forever. It turned out that what some had thought to be the culmination of physics was the last gasp of a terminally ill paradigm.

This was, ultimately, a happy ending, a story of scientific progress. But I’m afraid the broader history of what followed Victorian triumphalism is not so cheery. In the first half of the 20th century, humanity endured two world wars, both of which leveraged technological advances to produce unthinkable amounts of bloodshed; the efforts of the Nazis to exterminate Jews and others they deemed undesirable, justified through a grim parody of Darwin’s theories; a pandemic of unprecedented scale, which spread so far and fast in part because of a world that grew smaller by the day; the Great Depression, made possible in part through new developments in financial machinery and new frontiers of greed; and the divvying up of the world into two antagonistic nuclear-armed blocs, each founded on moral philosophies that their adherents saw as impregnable and each very willing to slaughter innocents for a modicum of influence.

In every case, the world of ideas that had so recently seemed to promise a utopian age had instead been complicit in unthinkable suffering. Nazism, famously, arose in a country that some in the late Victorian era would have said was the world’s most advanced, and Adolf Hitler’s regime was buttressed not just by eugenics and a fraudulent history of the Jews but by poems and symphonies, the art of the völkisch movement as well as the science of the V-2 rocket. Intellectual progress had led to death in ways both intentional and not; you might find it hard to blame the advance of progress for the Spanish flu, but then the rise of intercontinental railroads and ships powered by fossil fuels was key to its spread.


Amidst all this chaos, the world of the mind splintered too. The modern period (as in the period of Modernism, not the contemporary era) is famously associated with a collapse of meaning and the demise of truths once thought to be certain. In the visual arts, painters and sculptors ran from the direct depiction of visual reality, where the Impressionists and Expressionists who preceded them had only gingerly leaned away; the result was artists like Mark Rothko and Jackson Pollock, notorious for leaving viewers befuddled. In literature, writers like Virginia Woolf and James Joyce cheerfully broke the rules internal to their own novels whenever it pleased them or, at the extreme, as in Finnegans Wake, never bothered to establish them in the first place. Philosophers like Ludwig Wittgenstein dutifully eviscerated the attempts of the previous generation, like those of Russell, to force the world into a structure that was convenient for human uses; in mathematics, Kurt Gödel did the same. The nostrums of common sense were dying in droves, and nobody was saying that the world had been figured out anymore.

Meanwhile, in physics, quantum mechanics would become famous for its dogged refusal to conform to our intuitive understandings of how the universe worked, derived from the experience of living in the macroscopic world. Advanced physics had long been hard to comprehend, but now its high priests were saying openly that it defied understanding. “Those who are not shocked when they first come across quantum theory cannot possibly have understood it,” said quantum mechanics titan Niels Bohr. He was quoted saying so in a book by Werner Heisenberg, whose uncertainty principle had established profound limits on what was knowable about the most elementary foundations of matter. Worse still, Einstein’s 1915 theory of general relativity proved to offer remarkable predictive power when it came to gravity – and could not be reconciled with quantum mechanics, which was also proving to produce unparalleled accuracy in its own predictions about the atomic and subatomic world. They remain unreconciled to this day. Michelson and von Jolly had misapprehended their moment as a completion of the project of physics, a few decades before their descendants would identify a fissure within it that no scientist has been able to suture.

And out in the broader world, all of us would come to live in the shadow of the Holocaust.

I’m telling you all of this to establish a principle: don’t trust people who believe that they have arrived at the end of time, or at its culmination, or that they exist outside of it. Never believe anyone who thinks that they are looking at the world from outside of the world, at history from outside of history.

It would be an exaggeration to suggest that the scientists and engineers behind the seminal 1956 Dartmouth conference on artificial intelligence believed that they could quickly create a virtual mind with human-like characteristics, but not as much of an exaggeration as you’d think. The drafters of the paper that called for convening the conference did believe that a “two-month, ten-man study” could result in a “significant advance” in domains like problem-solving, abstract reasoning, language use, and self-improvement. Diving deeper into that document and researching the conference, you’ll be struck by the spirit of optimism and the confidence that these were ultimately mundane problems, if not easy ones. They really believed that AI was achievable in an era in which many computing tasks were still carried out by machines that used paper punch cards to store data.

To give you a sense of the state of computing at the time, that year saw the release of the influential Bendix G-15. One of the first personal computers, the Bendix used tape cartridges that could contain about 10 kilobytes of data, or roughly 1.5625 × 10⁻⁷ the storage of the smallest-capacity iPhone on the market today. The mighty Manchester Atlas supercomputer would not become commercially available for six more years. On its release, in 1962, the first Atlas was said to house half of the computing power in the entire United Kingdom; its users enjoyed the equivalent of 96 kilobytes of RAM, or less than some lamps you can buy at IKEA.
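The storage ratio in the paragraph above is easy to check. This is a minimal sketch that assumes decimal units (1 KB = 1,000 bytes, 1 GB = 10⁹ bytes) and takes a 64 GB base model as the “smallest-capacity iPhone”; the article states neither assumption, but together they reproduce its figure exactly.

```python
# Assumptions (not stated in the article): decimal units, and a 64 GB iPhone
# as the smallest-capacity model on the market.
BENDIX_TAPE_BYTES = 10 * 1_000        # "about 10 kilobytes"
SMALLEST_IPHONE_BYTES = 64 * 10**9    # hypothetical 64 GB model


def capacity_ratio(small_bytes: int, large_bytes: int) -> float:
    """Return the fraction of large_bytes that small_bytes represents."""
    return small_bytes / large_bytes


ratio = capacity_ratio(BENDIX_TAPE_BYTES, SMALLEST_IPHONE_BYTES)
print(f"{ratio:.4e}")  # 1.5625e-07
```

With binary units (1 KiB = 1,024 bytes, 1 GiB = 2³⁰ bytes) the same comparison comes out slightly lower, at about 1.49 × 10⁻⁷, so the article’s figure implies decimal units.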

Needless to say, these issues ultimately required more computing power than was available in that era, to say nothing of time, money, and manpower. In the seven decades since that conference, the history of artificial intelligence has largely been one of false hopes and progress that seemed tantalizingly close but always skittered just out of the grasp of the computer scientists who pursued it. The old joke, which updated itself as the years dragged on, was that AI had been 10 years away for 20 years, then 30, then 40….


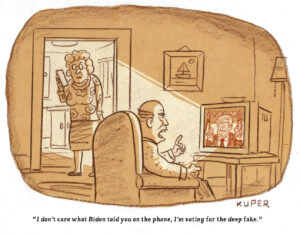

Ah, but now. I hardly need to introduce anyone to the notion that we’re witnessing an AI renaissance. The past year or so has seen the unveiling of a great number of powerful systems that have captured the public’s imagination – OpenAI’s GPT-3 and GPT-4, and their ChatGPT offshoot; automated image generators like DALL-E and Midjourney; advanced web search engines like Microsoft’s new Bing; and sundry other systems whose ultimate uses are still unclear, such as Google’s Bard. These remarkable feats of engineering have delighted millions of users and prompted a tidal wave of commentary that rivals the election of Donald Trump in sheer mass of opinion. I wouldn’t know where to begin to summarize this reaction, other than to say that as in the Victorian era almost everyone seems sure that something epochal has happened. Suffice it to say that Google CEO Sundar Pichai’s pronouncement that advances in artificial intelligence will prove more profound than the discovery of fire or electricity was not at all exceptional in the current atmosphere. Ross Douthat of the New York Times summarized the new thinking in saying that “the A.I. revolution represents a fundamental break with Enlightenment science,” which of course implies a fundamental break with the reality Enlightenment science describes. It appears that, in a certain sense, AI enthusiasts want their projections for AI to be both science and fiction.

In the background of this hype, there have been quiet murmurs that perhaps the moment is not so world-altering as most seem to think. A few have suggested that, maybe, the foundations of heaven remain unshaken. AI skepticism is about as old as the pursuit of AI. Critics like Douglas Hofstadter, Noam Chomsky, and Peter Kassan have stridently insisted for years that the approaches most computer scientists were taking in the pursuit of AI were fundamentally flawed. These critics have tended to focus on the gap between what we know of thinking beings, notably humans, and of how their thinking occurs, on the one hand, and the processes that underlie modern AI-like systems on the other.

One major issue is that most or all of the major AI models developed today are based on the same essential approach, machine learning and “neural networks,” which bear little resemblance to our own evolved minds. From what we know, these are systems that harvest impossible amounts of information to iteratively self-develop internal models, which then extract answers that are statistically likely to satisfy a given prompt. I say “from what we know” because the actual algorithms and processes that make these systems work are tightly guarded industry secrets. (OpenAI, it turns out, is not especially open.) But the best information suggests that they’re developed by mining unfathomably vast datasets, assessing that data through sets of parameters that are also bigger than I can imagine, and then algorithmically developing responses. They are not repositories of information; they are self-iterating response-generators that learn, in their own way, from repositories of information.
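To make “statistically likely” concrete, here is a deliberately tiny sketch: a bigram counter that records which word follows which in its training text and then answers with the most frequent continuation. This is an illustration of the statistical principle only, under the assumption that word counts can stand in for what a neural network learns; real large language models use neural networks with billions of parameters, and the function names and sample corpus below are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the continuation seen most often after `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    # most_common(1) breaks ties by first appearance in the training text
    return counts.most_common(1)[0][0]


def generate(model: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Greedily extend `start`, always taking the statistically likeliest next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(model, out[-1])
        if nxt is None:  # no observed continuation: stop early
            break
        out.append(nxt)
    return out


# Hypothetical toy corpus standing in for "unfathomably vast datasets"
corpus = "the beat drops and the beat repeats and the crowd dances"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))  # beat
print(generate(model, "the", 3))       # ['the', 'beat', 'drops', 'and']
```

Always taking the single most frequent continuation is one design choice among several; real systems typically sample from a probability distribution over possible next words instead.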

Crucially, the major competitors are (again, as far as we know) unsupervised, or more precisely self-supervised, models – they don’t require a human being to label the data they take in, which makes them far more flexible and potentially more powerful than older systems. But what is returned, fundamentally, is not the product of a deliberate process of stepwise reasoning such as a human might use but a vestige of trial and error, self-correction, and predictive response. This has consequences.
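Why no human labeling is needed can be shown in a few lines. In next-word prediction, the “answer” for each training example is simply the word that comes next in the raw text, so input and target pairs can be cut from unlabeled data automatically; researchers usually call this self-supervised learning. The sketch below is an illustration under that assumption, and the function name and window size are hypothetical, not any company’s actual pipeline.

```python
from typing import List, Tuple


def make_training_pairs(text: str, context_size: int = 2) -> List[Tuple[Tuple[str, ...], str]]:
    """Slice raw text into (context, next_word) pairs with no human labels.

    Each target is just the word that follows its context window, so the
    "supervision" comes from the data itself.
    """
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        target = words[i]
        pairs.append((context, target))
    return pairs


pairs = make_training_pairs("no human labeled this sentence at all", context_size=2)
for context, target in pairs[:2]:
    print(context, "->", target)
# ('no', 'human') -> labeled
# ('human', 'labeled') -> this
```

A seven-word sentence with a two-word window yields five training examples; scaled up to a large share of the written internet, the same trick produces an enormous training set without any human annotation.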


If you use Google’s MusicLM to generate music based on the prompt “upbeat techno,” you will indeed get music that sounds like upbeat techno. But what the system returns to you does not just sound like techno in the human understanding of a genre but sounds like all of techno – through some unfathomably complex process, it’s producing something like the aggregate or average of extant techno music, or at least an immense sample of it. This naturally satisfies most definitions of techno music. The trouble, among other things, is that no human being could ever listen to as much music as was likely fed into major music-producing AI systems, calling into question how alike this process is to human songwriting. Nor is it clear if something really new could ever be produced in this way. After all, true creativity begins precisely where influence ends.

The very fact that these models derive their outputs from huge datasets suggests that those outputs will always be derivative, middle-of-the-road, an average of averages. Personally, I find that conversation with ChatGPT is a remarkably polished and effective simulation of talking to the most boring person I’ve ever met. How could it be otherwise? When your models are basing their facsimiles of human creative production on more data than any human individual has ever processed in the history of the world, you’re ensuring that what’s returned feels generic. If I asked an aspiring filmmaker who their biggest influences were and they answered “every filmmaker who has ever lived,” I wouldn’t assume they were a budding auteur. I would assume that their work was lifeless and drab and unworthy of my time.

Part of the lurking issue here is the possibility that these systems, as capable as they are, might prove immensely powerful up to a certain point, and then suddenly hit a hard stop, a limit on what this kind of technology can do. The AI giant Peter Norvig, who used to serve as a research director for Google, suggested in a popular AI textbook that progress in this field can often be asymptotic – a given project might proceed busily in the right direction but ultimately prove unable to close the gap towards true success. These systems have been made more useful and impressive by throwing more data and more parameters at them. Whether generational leaps can be made without an accompanying leap in cognitive science remains to be seen.

Core to complaints about the claim that these systems constitute human-like artificial intelligence is the fact that human minds operate on far smaller amounts of information. The human mind is not “a lumbering statistical engine for pattern matching, gorging on hundreds of terabytes of data and extrapolating the most likely conversational response or most probable answer to a scientific question,” as Chomsky, Ian Roberts, and Jeffrey Watumull argued earlier this year. The mind is rule-bound, and those rules are present before we are old enough to have assembled a great amount of data. Indeed, this observation, “the poverty of the stimulus” – that the information a young child has been exposed to cannot explain that child’s cognitive capabilities – is one of the foundational tenets of modern linguistics. A two-year-old can walk down a street with far greater understanding of the immediate environment than a self-driving Tesla, without billions of dollars spent, teams of engineers, and reams of training data.

In Nicaragua, in the 1980s, a few hundred deaf children in government schools developed Nicaraguan sign language. Against the will of the adults who supervised them, they created a new language, despite the fact that they were all linguistically deprived, most came from poor backgrounds, and some had developmental and cognitive disabilities. A human grammar is an impossibly complex system, to the point that one could argue that we’ve never fully mapped any. And yet these children spontaneously generated a functioning human grammar. That is the power of the human brain, and it’s that power that AI advocates routinely dismiss – that they have to dismiss, are bent on dismissing. To acknowledge that power would make them seem less godlike, which appears to me to be the point of all of this.


The broader question is whether anything but an organic brain can think like an organic brain does. Our continuing ignorance regarding even basic questions of cognition hampers this debate. Sometimes this ignorance is leveraged against strong AI claims, but sometimes in favor; we can’t really be sure that machine learning systems don’t think the same way as human minds because we don’t know how human minds think. But it’s worth noting why cognitive science has struggled for so many centuries to comprehend how thinking works: because thinking arose from almost 4 billion years of evolution. The iterative processes of natural selection have had 80 percent of the history of this planet to develop a system that can comprehend everything found in the world, including itself. There are roughly 100 trillion synaptic connections in a human brain. Is it really that hard to believe that we might not have duplicated its capabilities in 70 years of trying, in an entirely different material form?

“The attendees at the 1956 Dartmouth conference shared a common defining belief, namely that the act of thinking is not something unique either to humans or indeed even biological beings,” Jørgen Veisdal of the Norwegian University of Science and Technology has written. “Rather, they believed that computation is a formally deducible phenomenon which can be understood in a scientific way and that the best nonhuman instrument for doing so is the digital computer.” Thus the most essential and axiomatic belief in artificial intelligence, and potentially the most wrongheaded, was baked into the field from its very inception.

I will happily say: these new tools are remarkable achievements. When matched to the right task, they have the potential to be immensely useful, transformative. As many have said, there is the possibility that they could render many jobs obsolete, and perhaps lead to the creation of new ones. They’re also fun to play with. That they’re powerful technologies is not in question. What is worth questioning is why all of that praise is not sufficient, why the response to this new moment in AI has proven to be so overheated. These tools are triumphs of engineering; they are ordinary human tools, but potentially very effective ones. Why do so many find that unsatisfying? Why do they demand more?

You can read the rest of this piece on Freddie deBoer’s Substack.
