‘Threat-Score Software’: Will It Get You Shot by a Cop?

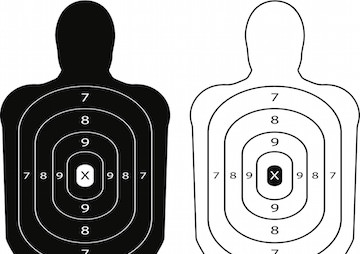

This law enforcement technology can use facts such as ZIP codes and frequency of address changes to assign a danger level to people an officer might engage. A high threat score could increase the officer's anxiety, and you know where that could lead.

marinjez / Shutterstock

I recently wrote about how using algorithms to determine prison sentences could further entrench systemic racism. This is a concern when factors such as one’s ZIP code or a family member’s criminal record, either of which could correlate with an offender’s race, are used to decide how the justice system handles crime. A new technology that assigns “threat scores” to people so police can determine whether they’re dangerous raises similar issues.

This technology crunches a number of factors to rank how dangerous a person is. Police can access this score and prepare accordingly before engaging with him or her. A version of this technology is currently being piloted in Fresno, Calif., and other parts of the country.

Police departments have been secretive about whether they use such technology, but we know that threat-score software called Beware has been made by a company called Intrado. Intrado has not released details about how it works, but it’s likely that factors that determine a person’s score include social media activity, arrest reports, property records and other data. As I recently reported, where someone lives or whether he or she has been to jail before can sometimes be linked to racial or ethnic background.

Intrado would not answer specific questions for Truthdig, but it did send a statement. “Beware is a tool built to help public safety agencies inform first responders about the environment they may encounter when responding to a 9-1-1 call. When a first responder receives a request for assistance during a 9-1-1 event, they have a short window of time to collect information. Beware works to quickly provide them with commercially available, public information that may be relevant to the situation and may give them a greater level of awareness,” the statement said.

However benevolent the intentions of the company, the consequences of using the technology for police work may be detrimental. “I would hope that nobody would specifically involve race in a system or formula [like Beware], but there are many things that correlate with race,” said Jay Stanley, senior policy analyst with the American Civil Liberties Union’s Project on Speech, Privacy, and Technology. “Not just things like ZIP codes and things that are obvious, but other things, like how often you move. Studies show that minorities move more often than white people.”

Stanley said he’s also concerned that unfair concepts such as guilt by association could contribute to threat scores, resulting in someone being wrongly considered dangerous just because he or she has a friend with a criminal record. He points out that a police officer who believes someone poses a danger will treat that person differently than someone the officer considers an upstanding citizen. “Some individuals will unfairly be facing police officers who are coming into the encounter expecting the absolute worst of them and [are] much more ready to use force,” Stanley said.

This is exactly the type of scenario that could result in wrongful shootings by police officers—and such incidents could be legally supported if the responsible officer could point to a high threat score as justification, Stanley noted.

Guilt by association could go well beyond what one would imagine. For instance, a person could be considered guilty by association with someone he or she has never met. As others have pointed out, for example, it’s possible that a threat score that factors in property records could determine that a current resident is high risk simply because a previous tenant was considered high risk.

This technology raises other red flags, especially about privacy, said Stanley: “What kind of data is being sought and collected and used by government agencies inspired by visions of using statistics to become more efficient, leading them to try and collect more and more information and retain information for longer periods of time?” And, he added, “Once you do create a score for somebody, that score [could be] shared and used for other purposes with other government agencies or companies.” It’s conceivable, he said, that a medium or high threat score could interfere with someone’s ability to get a job.

To avoid serious civil rights issues, companies working on this technology and police departments using it need to be transparent, Stanley said. Otherwise, the public will face a risk it doesn’t fully understand. Just as the police don’t want to go into a situation without knowing the dangers, citizens don’t want to interact with police without understanding the dangers posed to them.
