AUDIO: Robert Scheer Talks With William Binney About the iPhone and Blowing the Whistle on the NSA

In this week’s “Scheer Intelligence,” Truthdig Editor-in-Chief Robert Scheer sits down with William Binney, a former National Security Agency official turned whistleblower, to discuss the fight between Apple and the U.S. government over access to Americans' cellphone data.

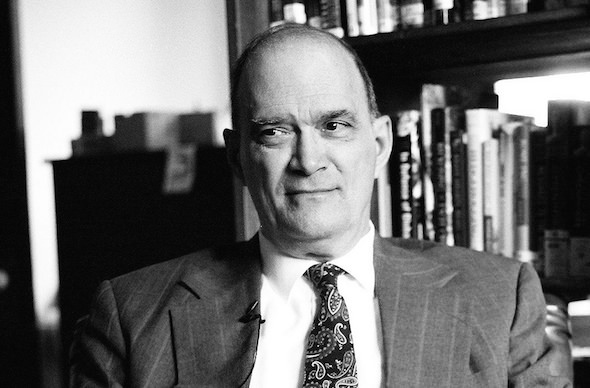

William Binney, a whistleblower and architect of key National Security Agency programs. (Jacob Appelbaum / CC BY-SA 2.0)

Binney spent over 30 years at the National Security Agency as a high-ranked official and left in 2002 after criticizing the agency’s system for collecting data on Americans.

In their conversation, Binney explains why he thinks the government is overreaching with Apple in its attempt to access data from a cellphone used by one of the San Bernardino shooters. Binney talks about how the NSA is now overwhelmed with data, doesn’t need nearly as much as it is collecting, and how there are other ways to get the data it is looking for without invading most Americans’ privacy.

Binney also discusses the ThinThread data collection system that he helped create while at the NSA, which ended prematurely, and why he believes the agency chose instead to implement the more expensive and bulky Trailblazer, later widely considered to be a failure.

Read the transcript:

Robert Scheer: Hello, this is another edition of Scheer Intelligence, conversations with people who are actually the source of this intelligence. In the case of today’s interview, it’s with William Edward Binney, a major figure in the U.S. intelligence apparatus, where he worked for more than 30 years with the United States National Security Agency, the NSA. He developed a program for going through all sorts of data, electronic data, called ThinThread; it was considered a privacy-sensitive program, one that people thought had some great effectiveness, and yet it got trampled in the pursuit to spend more money. This was all before 9/11. And instead, a program called Trailblazer was put in, and that was not efficient. And Bill Binney blew the whistle on government waste and fraud and was visited with a stark encounter with the FBI and the threat of imprisonment. But none of that came to anything; he’s fortunately a free man today and a major commentator on security issues. Bill, are you there?

William Binney: Yes, I am. It’s good to talk with you again, Bob.

RS: Hi. Listen, what I’d like to begin with is, you know, [at] the moment Apple and Apple CEO Tim Cook are being scapegoated for endangering the national security because they would not do whatever the FBI wanted in breaking their encryption code and providing access to one of these San Bernardino killers. What do you make of this whole controversy? Is it real? Is it, does our national security require breaking into our personal codes on our phone, and what’s your assessment?

WB: Yeah, first of all, I think the FBI got into the phone and changed the password and they messed it up in the process, [Laughs] and so they’re asking Apple to fix up their mistake. So, but that’s part of the problem; the real issue, though, is they want Apple to generate software that would let them go into the phone and basically figure out, do a mass attack and get the password to break in and get all the data off the phone. The problem with that is—and this is in the background—it’s really NSA and GCHQ and other intelligence agencies that want this to happen. Because what they’ve done over the years is they’ve—and recently, I think it came out a few months ago about the theft of SIM cards from a manufacturer in the Netherlands; they were stealing billions of SIM cards every year. What that means is they have, now, the little cards that you insert into your computers and phones that give you, identify you and also give you access codes. So in other words, by having that information they can access your device wherever you are, and they work worldwide. So if Apple did that, and put that code together and gave it to the government or got hacked by some other government or some hacker or something, and the code got out, then those people could access any device, any iPhone in the world anywhere through the network and attack it. So really, the whole idea here is that the FBI wants to know everything about you, and you’re not supposed to know anything about them. Now, as I recall, you know, back when our founders created this nation, I mean, I thought the whole idea was the reverse relationship was supposed to be [Laughs] what we had. That is, we were supposed to know what our government was doing on our behalf, and they were supposed to not know what we were doing unless they had probable cause to do so.

RS: Well, you know, it’s interesting you bring up the founders. Because the cheap argument that’s made by the national security establishment, in terms of security, is that the founders never faced threats that we do today. And it’s an argument that I personally find absurd; I mean, the founders, the people who wrote in the protections of the Fourth Amendment and the other amendments, you know, had just fought a war against the powerful crown of England; they would be attacked again by that same crown; they had other enemies around the world. And here was this struggling little enterprise in self-rule in the colonies, and they knew that if things went wrong, they would be hanging from the nearest tree. And yet they enshrined these protections saying, why? Because power corrupts, and even though they were going to be the power in the new government, they were worried about their own corruption by it, and they wanted the citizens to be armed against their own lying and distortion. And we get, now, we’re the most powerful nation in the world—you know that; you worked in the military ever since you were a young Pennsylvania State University graduate. You were drafted during the Vietnam era; you’ve worked in analysis and code-breaking, you know, going way back to 1965. And I think you would recognize, as I do, that this government now that spends almost the same amount as the rest of all of the world’s nations on national security is certainly in a far, far stronger position than the founders were. And maybe, you know, no government has ever been more secure, and yet they claim we can’t afford the freedoms that the founders enshrined.

WB: Yeah, that’s true. In fact, if the capacity that they had to spy on people existed back then, our founders wouldn’t have made it to first base. [Laughs] They would have been picked up right away. So, but the real point is, and one of the reasons why we have successful terrorist attacks both here and around the world, is because they’re taking in too much data. What that means is, their analysts are so buried in the data that they can’t figure out any threats. This has been published by The Intercept in May of 2015, they published an article where they were listing different—and they had the backup documents for the articles written by NSA analysts, inside NSA—this was Edward Snowden’s material. And some of the titles of it were, “killed by overflow,” or “data is not intelligence,” you know, and “buried in intercept,” and you know, all kinds of things. The “praising not knowing,” and things like that; all talking about analysts can’t figure things out because there’s too much they’re asked to do. In other words, the—

RS: Too much hay has been [collected]—you can’t find the needle—

WB: –exactly, yeah, exactly. But the consequence of that, Bob, is people have to die first before they find out who committed the crime. Then they focus on them, and they can do real well. But if you’ve noticed, every time that’s happened, they’ve always said, oh, yeah, we knew these people were bad people, and we had data on and information on them, and they were targets. Well, if that was true, why didn’t you stop them? You should have been focusing on them, instead of looking at everybody on the planet.

RS: Well, you know, that’s an important point you make that people very often ignore or don’t know about. Every single one of these cases, whether it’s the Charlie Hebdo massacre in France, whether it’s the Boston marathon, whether it’s the nineteenth hijacker in San Diego of the 9/11, who was living at an FBI informant’s home and was clearly on the radar of both the FBI and the CIA. In every one of these situations, the perps were known. They were known. So it wasn’t a question—it was a question, really, of not—not that you didn’t have enough access; you had plenty of access, you just didn’t do the old-fashioned police work of knocking on the door or checking out where they are. And I want to, the reason I wanted to talk to you about this thing—this whole magic land of encryption—is that the FBI, when it wants to break into the system, forgetting that the lessons we had under—they’re speaking from the J. Edgar Hoover building in Washington, and people should be reminded, it’s the same J. Edgar Hoover, when he was head of the FBI, who tried to destroy Martin Luther King, and planted false information on him, and was tracking him all the time. So we know that J. Edgar Hoover and the FBI have been symbols of major intrusion on freedom. But it’s interesting, their argument that comes back is oh, you don’t know how bad the enemy is, how devious they are, and we need—what did they say? Apple is acting as some kind of a terrible watchdog and not letting us at the code. You have been one of the pioneers in encryption; you’re a mathematician, you know all about breaking codes, you know all about protecting people’s freedom. And you’ve been inside the belly of the beast, if you like; you’ve spent most of your life in the national security establishment, and you’ve been praised at having functioned at a very high level, and being one of the most effective in understanding encryption and code-breaking and so forth. 
What do you make of this current attack on Apple, that their effort to protect their consumers, which they have to do all over the world because they’re in China, they’re in Egypt, they’re everywhere—what do you make of the argument that Apple has prevented the FBI from doing its work?

WB: Well, I just think that’s a false issue. I mean, very simply, they could go into the NSA bases, which they have direct access to, and they can go in and query the data that they want out of those bases. I mean, or they could go into any of the ISPs, telecommunications companies, and get the data there. Or they can actually go into the, scrape the cloud, the Apple cloud that they use as a backup. So there’s many ways they can do that; I mean, other than that, they could give the phone to NSA and let them hack it. You know? Or, for example, they can copy the phone thousands of times and just start trying things, and do a brute force over thousands of copies. You know, there’s any number of ways they can do things; they just, they want to make it easy on themselves, and they want to claim a false issue to get everybody to believe what they’re telling them.

RS: Well, that’s the real threat here; that’s the Orwellian threat. I mean, here’s Apple basically saying, look, we can’t function as a multinational corporation selling these phones around the world if we let you guys crack our encryption codes in this really, basically, minimal protection of the privacy of individuals. They’re going to want to do it in China and then, you know, they’re going to want to do it everywhere else where we sell phones, so you know, we have to be loyal—this is the great obligation and, indeed, contradiction of being a multinational corporation; you have to protect your consumers all around the world from their governments. Therefore, you can’t let your government just, you know, go willy-nilly into the codes. And yet those of us who have not spent our life as you have dealing with encryption, dealing with code-breaking, dealing with secrecy, tend to be intimidated by the argument that when Apple does this, they are preventing the FBI from doing its work. And you’re basically saying that’s, that’s nonsense.

WB: Yeah, that is, yeah. Absolutely, I mean it’s, all they have to do is ask NSA. The problem here is that NSA data is not, supposedly not admissible in a court of law. And that’s, I guess, what they want to do, is get a source of information that would be admissible in a court of law.

RS: You mean because it’s on Americans?

WB: Right, and it’s not acquired with a warrant, yeah.

RS: Yeah. So tell us about encryption. I mean Apple has been, all of the big companies like Google and Instagram and Facebook, have made efforts to protect the privacy of consumer data for no other reason than you want to use them to pay bills, you have your financial data, you have medical data. And you know, people have to feel secure, or they’re not going to surrender all of this information in the first place. But Apple has been the most aggressive, and certainly Tim Cook deserves praise for doing this. But tell us about what Apple is doing with encryption; why it matters, why it should be protected and why the government wants to break it.

WB: Well, I think, I think the issue here with Apple is that Apple put in software that only, for example, does several things; it doesn’t, it only allows you 10 tries maximum on guessing what the password is. And if you fail 10 times in a row, it erases what data it has on the phone. And/or after that you can only put them in at a certain rate, you know; I think the rate is, you insert one and then a minute later you can insert another try, and then a minute plus a minute to the third try. And every time you try, you have to wait another minute, so there’s a delay in how fast you can put them in. And then I think they have to, you have to input them like you’re a human typing them in. There’s some kind of code to recognize the rates of typing in numbers so that you can’t have a machine just start cranking them out and inserting them really quickly on a massive scale. So those kind of things are impediments to even having the brute force attack on the passwords to get into the phone. But other than that, I don’t believe the data on the phone is itself encrypted; it’s just the idea of getting access into it. I think that’s the issue here with Apple, at least that’s my understanding of it. But encryption supposedly is supposed to protect the kinds of things that you’re saying or doing, so you have digitally encrypted voice, or some kind of algorithm-generating key that would be summed with text that you would pass around the Internet, like PGP does, or other kinds of encryption systems. But those kind of are, they give you things like fixed keys, and anytime there’s a fixed key involved with a person and they use that over and over again, that just harkens back to the old Venona system, which was the—for me, anyway, it was the diplomatic code, the Russian diplomatic code used in the late ’s and early ’s. And that was supposed to be a one-time, pad-type code system where you used something once and threw it away and never used it again. 
But humans being humans—and Russians are humans, of course—so they started reusing them; well, when they did that, that gave depth into that system, and that’s how they broke into it. So the whole idea is, there are ways of attacking those kinds of problems. But even today, there’s many more ways of going at it. I mean, they can actually attack your computer after you decode it and take your messages that way, or they can actually try to go in and get your keys out of your computer itself. So there’s many ways, different ways now to be able to do that, because we’re all connected on the World Wide Web. You know, it’s a wonderful thing, gives you all kinds of information worldwide, but it also gives everybody access to everybody else and everything they’ve got. So, and that’s what hackers and government agencies are doing, going in trying to get that data, then getting knowledge about everybody.
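
The pad-reuse weakness Binney describes can be illustrated with a minimal sketch. It assumes a simplified byte-wise XOR pad, which is a stand-in for illustration and not the cipher actually used in the Venona-era traffic; the messages and the names `xor_bytes`, `msg1` and `msg2` are hypothetical.

```python
import os


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


# Two hypothetical plaintexts of equal length (illustrative only).
msg1 = b"MEET AT NOON"
msg2 = b"SEND MONEY! "

# A random pad that is supposed to be used once and then discarded.
pad = os.urandom(len(msg1))

ct1 = xor_bytes(msg1, pad)
ct2 = xor_bytes(msg2, pad)  # the mistake: the same pad is used again

# XORing the two ciphertexts cancels the pad entirely, so an
# eavesdropper is left with the XOR of the two plaintexts.
leak = xor_bytes(ct1, ct2)
pad_cancels = leak == xor_bytes(msg1, msg2)

# If one message is known or guessed, the other follows directly,
# without ever learning the pad.
recovered = xor_bytes(leak, msg1)
```

When a pad is used only once, each ciphertext on its own reveals nothing about its message; the second use is what gives an analyst two texts "in depth" to work against each other.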

RS: So let me just set the record straight here. There’s pre-Snowden and after Snowden. And before Snowden, because he released so much information through The Guardian and The Washington Post, that it was no longer possible to deny what had already been leaking out: that the government, our government, was actively involved in collecting the data, analyzing the data, that was being passed around through this World Wide Web. And as a result, companies like Apple and Google felt pressure from their consumers worldwide, from Europe and elsewhere, of feedback saying hey, can we trust you? Can we trust your devices? In the case of China, Apple is up against Chinese-owned companies operating that are highly competitive, making phones, also doing search engines and everything else. And so multinational companies like Apple and Google are under pressure to say, in the post-Snowden world, no; we are not just rolling over for the NSA and the CIA. We are actually multinational corporations loyal to our consumers. That’s sort of the main issue, isn’t it?

WB: I think for them, yes, because they’ll lose market share if they don’t try to—if they don’t make it a convincing case that they’re actually trying to protect their customers’ privacy.

RS: Right, and they’re also making an important case for individual freedom—

WB: Yes.

RS: They’re doing it for profit motive, but nonetheless, they’re saying that you can’t really use these gadgets and the World Wide Web to do all these very personal transactions, financial transactions, medical records and everything, if the customers throughout the world don’t feel a considerable degree of privacy. Private space and certainly immunity from government surveillance, the Orwellian nightmare that the government knows everything about you. And so, in this post-Snowden world, Google and Apple and Instagram and Facebook all got together and said: “We have to push back.” And that’s where the struggle really is now, with Apple having taken the clear lead in this. And I want to take you back, as somebody who worked inside the national security establishment most of your life, for over three decades, and address this concern that the FBI and others are able to stoke, is that this privacy comes at too high a price for our national security. That we live in a world in which privacy on the Internet is a luxury we can’t afford. And that’s what Apple is pushing back on. And what is your answer as a national security professional of the highest order? No one denies that you had, you know, highest level of efficiency in ranking and knowledge of this. So what’s your assessment?

WB: Well, my basic sense of it is that this is all just nonsense that they’re saying; what they’re doing is trying to cover up for their unprofessional incompetence. They have all the data anyway already, and they knew all these people, even before 9/11 we knew who the terrorist network was worldwide. I mean, it wasn’t, there was no question; we knew all these people, and even the two that came into San Diego from Kuala Lumpur before 9/11, you know; we knew those people too, and we knew, we were tipped off by the Malaysian intelligence, also, that they were coming. And you know, the intelligence, the information is there; it’s just that they don’t have the ability to understand what they’ve got. That’s the problem. And that’s where their competence is really in question, and that’s what they’re trying to cover up by saying there are other issues that are really keeping us from protecting you. And that’s false. I mean, they have all the information necessary to protect us; they’re just not very good at it.

RS: Well, let’s address that. Because in a sense, you’re saying that Apple is a scapegoat here.

WB: Yes.

RS: That the government is going after Apple to conceal the fact of their incompetency. And so you were inside this system as deeply as one can be; take us into that world without jeopardizing your freedom. [Laughs] I understand that. And you could tell us about your own brush with the law. And why don’t you take us through that case, what happened to you; because you tried to reveal that the government was not interested in efficiency and using our money wisely to protect us; but rather, they—why don’t you tell us about your own case, and what happened?

WB: Ah, OK. Well, that was back in the 1990s; I mean, you know, I was the technical director at the time of the world geopolitical and military intelligence production. And that—so my responsibility was to look around to see all the technical problems and see what could be done to solve them for the analysts to be able to produce intelligence that would give warnings of intentions and capabilities of potential enemies. And, ah, like terrorists, or other countries and militaries and things like that. So when I took that job on in ’97, it was very clear to me that the digital explosion was causing the greatest problem, and that we had to have some way of being able to deal with that. Because the analysts even back then were flooded, and they were complaining about all the data; even back then, and we didn’t have the capability to collect all this information like we do today. We’ve had orders of magnitude improvement on the ability to collect data. And yet our ability to analyze it internally in NSA, GCHQ or anywhere else has not kept pace; in fact, it’s fallen so far behind that they’re all pretty much feeling incapable of doing the job. And this is pretty evident from the articles that have been published, and by The Intercept from the Snowden material. So that was the problem then. So we started addressing that, and the whole idea was to figure out a way to look into the massive flows of data at the time—it’s not so massive as you look back on it, but back then it was pretty bulky and a lot of information to try to wade through. The whole idea was to be able to look into it to find out what was important in that data to pull out and capture to give to your analysts, and only give them that data so they had a rich environment to be able to analyze and succeed at the jobs they were trying to do. 
So the idea was how you can make the content problem a manageable problem, and that’s basically what we, why we designed ThinThread, that was running—by the way, it had three different sites for almost a year and a half prior to 9/11, and they killed it in August of 2001, just before 9/11. And so it didn’t have a, it didn’t have a chance; besides, it was mostly pointed toward Asia, it wasn’t looking in the Middle East. That was the problem with it. Otherwise, we could have picked it up. But the whole idea was it was a very cheap program that cost us $3,200,000, about, to develop that from scratch and get it operational. And we had planned to, and proposed to, deploy it to 18 sites that were those that were addressing terrorism, because I went to the people in the terrorist analysis shop, and I said, what sites do you have that you get information from that’s useful in analyzing the terrorist problem? And they gave me a list of 18, and I said OK, these are our targets. And we wanted to deploy those in January of 2001.

RS: This is all within the NSA, right?

WB: This is all within NSA. And they refused to—it was only going to cost 9.5 million dollars to do that, and yet they refused to do that. And they said no, we have this other program; it’s the grand scale program, it’s going to cost billions—which it did—[laughs] and this is the one we’re going with, and we don’t want any competition. So I called that—and we knew at the time, Bob, that the companies that wanted to feed on all these billions were lobbying against us in Congress. Because we had members of the congressional staffs telling us that.

RS: So this is actually still before 9/11.

WB: Yes.

RS: You develop a relatively cheap program for sifting through all this electronic data that has been vacuumed up all around the world, and to try to make sense of it. And then because of the lobbyists and their congressional allies and the people in your own agency, a much more expensive program called Trailblazer is developed. And that never worked, did it?

WB: Ah, no, actually, it failed in 2005, and they declared it dead in 2006.

RS: Yeah, so it was a complete boondoggle. How much did that end up costing, by the way?

WB: Ah, my thought was it was a little more than $4 billion. But Tom Drake said, no—he was still there when it was going on—he said no, it’s more like $8 billion.

RS: Let’s mention Tom Drake. Because Tom Drake—in your case, even though the FBI came to your house and put a gun at your son’s head and dragged you out of the shower without clothes, and so forth—there actually were no charges against you. Is that true?

WB: Yeah, that’s true. In fact, toward the end, in late 2009, they called our lawyer; we had a lawyer at the time, so … [Laughs]—and they told him that we were going, they were going to indict us. So that’s when—see, I’d been assembling evidence of malicious prosecution on the part of the Department of Justice, including fraudulent statements and malicious, outright lies to the court. And so they told our lawyer that, and then so I got on the phone, called Tom and gave him all that evidence, and threatened, and basically threatened them; I said—so that when they’d take us into court and charge us with conspiracy, which is what they were going to do, then I’ll introduce the evidence against them for malicious prosecution and go after them criminally.

RS: And what had you done in that time? Now, this is like 10 years after the fact; you had developed a very lean program that was more privacy-centered and concerned than Trailblazer, a program that people later said worked. And your wife was in the NSA also, right?

WB: Yes, she was, yeah.

RS: And I’ve interviewed Tom Drake, and for people who don’t know him, Tom Drake was working at the NSA and after 9/11—and Bill, correct me if I’m wrong on any of this—but my understanding is after 9/11, he was assigned to see if there was anything within NSA that would work to find out more about terrorists. [Laughs] And he found your program, and he thought, well, that thing works. And then when that got killed, he then went to—well, fill me in here. He went to congressional aides and so forth to—he was a whistleblower. Were you also a whistleblower?

WB: Yes. Yeah, when I found out—see, after 9/11, the president signed an order on I think it was the fourth of October. But before that they had verbally made the decision, it must have been within four days of 9/11, so because they had to order parts and all that to put the system together that they wanted to spy on U.S. citizens and everybody else. So that ordering started, you know, probably four days after 9/11. So around September 15, because the equipment started coming in at the end of September, early October. And then they had it assembled down the hall from us by the second week in October, and that’s when they started taking in all the data on U.S. citizens from AT&T. So that, basically, was the start of the Stellar Wind program, which eventually, very shortly thereafter, expanded to content. Which Mark Klein, I think, was the one who exposed the only one, only one AT&T site at San Francisco. But there are actually about a hundred other sites inside the U.S. distributed with the population, not along the coast; if they were going after foreigners, that’s where they’d be. So they’re distributed with the population, and so the target is the U.S. population. So Mark Klein exposed that one in San Francisco where they had the duplicating the fiber—

RS: He was a civilian employee of AT&T, saw a strange operation going on in the building, made inquiries and found out that it was actually a government operation.

WB: Yeah, that’s right, yeah.

RS: And so, but take me back to Drake and yourself — 9/11 happens and everything; well, the lid is off, we’re going to do everything to spy, spy, spy. And they develop this very bulky, expensive program that never works, to sift through the data. And you had this very lean program that didn’t cost a lot of money, and therefore people weren’t going to make a lot of profit from it, and that basically gets killed, right?

WB: That was their motive; they wanted to make a lot of profit, yeah.

RS: Yeah.

WB: Plus it created a lot of jobs, though; you see, what happens when government employees retire—I mean, you can see it with all the directors and deputy directors and so on of NSA and CIA, when they retire, they go to work for these beltway bandits, you know. So they’re helping to create a follow-on career, if you will.

RS: But you know, you and Tom Drake—and I’ve met Tom Drake, and of course I’ve met you, and interviewed both of you—you’re Boy Scouts. You guys are true believers in making the country safe. You—right? I mean, you guys are squeaky-clean. [Laughs] And you’ve lived your whole life in this establishment, and then Tom Drake is assigned within NSA to see what works, what can we do to meet this threat right after 9/11, go and look at what works and see what we can do. He decides your program is the efficient one, and he gets punished even more severely than you; you’re not charged with anything at the end of the day, but he is destroyed financially and, you know, faces very serious charges and ends up working, ironically, in an Apple store to try to pay his bills, right?

WB: Yeah, that’s right.

RS: Tell us about that.

WB: Yeah, in fact—well, they fabricated evidence against Tom Drake, too. They went into his house and pulled out, clearly marked unclassified by NSA, unclassified data, which were memos and things like that that he had that were related to the DoD IG investigation, that he was supposed to keep that data. So he had that, but then they saw that and they crossed out the unclassified and stamped it secret or top secret, and then accused him of having classified material. So it’s like fabricating the evidence—that’s just a basic felony; they should have been charged with a felony, the whole thing should have been thrown out of court; I mean, they should never have allowed it to begin with.

RS: Let me ask you a pointed question here. This was done under Barack Obama, wasn’t it?

WB: Ah, this one was, it started under—the actual reclassification of things, and that came under the late portion of George Bush’s term. But he didn’t take any action; the action was started when Obama took over as president, yeah.

RS: And it was Eric Holder, a guy who a lot of liberal people think is a pretty liberal guy and is out there campaigning right now for Hillary Clinton. But this is a guy who really went after Drake and went after you and has gone after more whistleblowers than any other attorney general.

WB: Yeah, about three times the rest of the presidents of the United States, yeah; he’s … [Laughs] That’s how many he goes after. They want to silence whistleblowers, but what they really don’t understand is that, you know, the country was founded on principles that are nowhere near those that they’re executing. I mean, that’s the problem; all the actions they’re taking are basically unconstitutional. In fact, I call it high treason against the founding principles of this nation.

RS: OK, but, so—I just have to remind people, I’m talking to William Binney, who spent most of his adult life within the national security establishment in a very high, trusted position in dealing with code-breaking, dealing with sifting through data, and so forth. And develops a very effective program that’s been judged as so; and then another member of the NSA staff is assigned to look at what works after 9/11, says hey, this program Bill Binney’s working on is really the right way to go, not this wasteful, $4 billion program that never produces anything, called Trailblazer. And they don’t want to go with it because there’s not enough money to be made. And you guys get singled out and are persecuted, as well as, in his case, prosecuted. Why? Because you went to other people in the government, said hey, this is not right; you used the channels, didn’t you?

WB: Yeah, we used the proper channels, yeah. We went to the intelligence committees. This is what Feinstein, Senator Feinstein has always said—we should have used the proper channels. Well, we did. And that only made us visible to everybody, and they said we have to step on them. And that’s what they did.

RS: Well, when you say used the channels, what did you do?

WB: Ah, well, went to the House Intelligence Committee, to the inspector generals at the Department of Defense and the Department of Justice and to other members of Congress. Those are the channels you’re supposed to use when you have an issue. And we also got a DoD IG investigation of NSA because of our complaint, and it, of course, verified everything we said; and actually, it documented that the effectiveness of the ThinThread program—it’s all been redacted out of it, because it’s too embarrassing to NSA, you know. So they’ve redacted it and hidden it, even though the paragraphs that they’ve redacted, about 80 percent of the redactions are on unclassified paragraphs. [Laughs] And the reason they do that is because it just makes them look like they’re really corrupt and fraudulent, wasteful of money and defrauding the public, and actually not—and I basically accused them, and I do that publicly, of trading the security of everybody in the United States and the free world for money.

RS: So, there we—[Laughs] Let’s just start with that statement because it takes us back to the Apple case. I mean, here’s this private, profit-making company that needs to service our phone needs and so forth; it is a multinational company and says, look, we can’t take care of our customers worldwide if we can’t protect their privacy to some degree, some significant degree, against their government and any government. There’s got to be, you know, some protection here. And they are scapegoated for this; they’re made to seem an enemy, they’re threatening our security by the charges made by the head of the FBI. You were inside this national security establishment. And if I understand you correctly, you’re saying that what Apple has done has not made us less secure; that they have plenty of data, they just don’t know how to look at it, and they don’t do it efficiently. Is that a fair statement?

WB: Yeah, that’s fair, yeah.

RS: OK. And then you do what, you know, when people like Hillary Clinton and Dianne Feinstein and plenty of other people who are supposed to care a great deal about our freedom and our rights, and are on the more liberal side of things, or at least Democrats—they tell us Edward Snowden should have used the channels. And other whistleblowers should use the channels, you know. And you and Thomas Drake used the channels; you were the good scouts. And as opposed to Edward Snowden, who was a contractor, you were a government employee, and so you did have some whistleblower protection. But that whistleblower protection turned out to be meaningless.

WB: Actually, in the intelligence community, there was very little whistleblower protection at all. Up until, I think, about two years ago when President Obama started putting together some level of protection. But still, even today, that, from what I can see, that protection level is virtually nonexistent. I mean, if they want to go after you, they go after you, that’s all.

RS: So here we’re having a debate now about, say, my—I got it right here, my iPhone 6 Plus. [Laughs] And there’s all sorts of people, whether they’re investigating ordinary crime, or national security, say they want to break into this phone. And you’re saying, first of all, they have so many ways to get this data in my phone, this is a fiction anyway.

WB: Yes.

RS: And they certainly, if they would go through channels and get warrants, they could do it even with constitutional protection. And so why are they picking this fight with Apple? What is your assessment?

WB: Well, it’s getting back to what I think I started out with. For example, let me use your phone. Suppose you take your phone and you’re driving down the streets in L.A., or you take a trip somewhere around the world or something. They probably already have the SIM card that’s in that phone, which means they can access your phone directly through the network. So that if Apple developed software that would allow them to break in to get past that password you have, protecting your data on that phone, then they could remotely dial in and do that, and break into your phone as you moved around, and take all that data off your phone without you knowing. So that’s the point; that seems to me to be their point, that’s really what they want to do with all the iPhones in the world.

RS: So that, and that’s something the Chinese government could do, the Egyptian government?

WB: Yeah, sure, yeah.

RS: So, I mean, here we—our president has condemned the Chinese for breaking into phones and so forth. And yet here’s an American company that is trying to be trusted throughout the world, and have its products trusted. And basically you’re saying that it’s almost laziness on the part of these government agencies, who have so much access to data that they can’t even comprehend, that they feel they want to go even further and make it—have even the minimal protection that Apple is offering, to break that. Is that, would you say, correct?

WB: Yeah, that’s right. I mean, they also have plans, like the British do, bulk acquisition of data; they also have a program to bulk attack, massively, large numbers of phones or large numbers of computers. So it’s like hacking in on a massive scale in a given area, for example. And so that’s the kind of thing that they could do if they had that kind of software. First of all, you know, usually the government, when they ask a company to do a job, they usually issue a contract to them and pay them to do it. [Laughs] But here, they’re just telling them to do it because of the court order. Well, you know, they don’t have to comply to that; I mean, they can’t be ordered to do a job without getting paid for it. That’s the point too, you know; that’s another aspect of it. But the idea is, they want to take that software and be able to, you know, attack any phone or groups of phones anywhere in the world; simultaneously attack them, you know, and, or maybe just cycle through them, making sure they still have the right passwords.

RS: So, I’m talking to Bill Binney, someone I’ve interviewed before, who was one of the major figures to come out of the intelligence community at a very high level and tell us, really, how dangerously screwed up this thing is. Let me ask you, the sort of subtheme of this series are American originals; people who break the mold and let us in on how things work in a way that exhibits courage. And certainly that’s been your case, and the case of Thomas Drake and others. But they’re only a handful; we only have a small number, 10, 20, 30 whistleblowers have really told us what’s going on. The rest go along, go along. Now, you got into this to make us safer, right?

WB: Yes, I did—

RS: Got out of college, you went into the Army, the Vietnam era and so forth, you’ve devoted your whole life to making us safer. What is your assessment about these assaults on our freedom, and have they made us safer? Is there a trade-off? How is it working? What’s going on? What’s the bottom line here?

WB: Well, first of all, you know, there’s no need to trade security and privacy; there’s no trade-off necessary. I mean, you can have both. That’s the whole point; that’s what we demonstrated with ThinThread, and that’s been documented in a DoD IG report. And that’s why it’s all been redacted; they don’t want you to know that. Again, because if they recognize that the problem had already been solved by a very targeted professional approach, disciplined approach, then they didn’t have the problem of velocity, variety and volume in the digital age to get money from Congress. That’s why they had to get rid of us and kill the program, so that, because it had to be out of sight, so nobody knew that that was possible. And that’s fundamentally what they’ve done; they’ve been very clear to hide that knowledge and keep it out of the public domain so nobody knows that there is, really, another alternative. So my point is that that’s, the money is the motivation that’s been their motive from the beginning, and it still continues to be. I mean, the budget for the intelligence community has almost tripled; I mean, there are over, my estimate is there are over $100 billion a year now for the intelligence community alone. And I use the Garland, Texas, attack as a very clear example. Those people were known even before the attack at the time, too, and the Garland police were tipped off two days in advance of that attack by a member of Anonymous. Not by the government agencies supposed to protect us. Right? Their bulk acquisition of data wasn’t, they weren’t able to find the information that would have alerted them that that was going on, yet some member of Anonymous found it in social networking that they were watching, some people were talking about doing a terrorist attack, or doing terrorism or something. And then when they said what they were going to do, they notified the Garland police that that was going to happen. And that was two days before the attack. So that’s the whole purpose of intelligence; to produce intentions and capabilities of potential adversaries. That’s the whole idea of intelligence. It’s not a forensic job after the fact, after people get killed; that’s a police job, and that’s basically what intelligence has been reduced to, because of all this bulk acquisition and bulk data collection. And they just can’t get through it. And so they’re reduced to following up after an attack and, you know, putting the pieces together of a forensics job.

RS: So just so we have a takeaway for someone listening to this podcast, to conclude it, Bill, I just want to be clear. You, a national security veteran of over 30 years, are asked by your government at the highest level to figure out, how can we sift through electronic data, basically, that’s circulating through this World Wide Web and so forth, in a way that makes us secure. That’s your main goal. And you come up with a program that now the inspector general and others have agreed, ThinThread, was a program that was efficient and had minimal attack on one’s privacy; was efficient in sifting through data, not overwhelming us with data we can’t analyze; and that a fellow named Thomas Drake, who was in your own agency, who was assigned to see after 9/11 what worked, discovered that your program worked. And instead of going with that program, which could also protect our privacy and yet at the same time make us secure, in order to get $4 billion worth of contracts, they go for something called Trailblazer that never works. And they end up collecting ever more data that they can’t understand; they’re, as a result, not making us more secure, but rather less secure. And we then come to the day where Apple is the enemy in the eyes of the FBI and the NSA for trying to give us minimal protection, and the government’s claim that it must break through the code and protections that Apple has in order to make us secure is totally bogus. Is that a fair judgment, and—

WB: Yeah, I think you’ve pretty much put your hand on it, right. That’s exactly what’s going on. And it’s basically a war of disinformation; they’re trying to hide the facts of the truth of what they’re doing, and also how they operate, and then their effectiveness in that. We’re not getting a good return on investment on all that money we’re pumping into the intelligence community. One of the first things I would suggest is that if there’s an attack and they fail to stop it or to alert us before it happens, that we ought to start cutting their budget, and for every attack they should lose 10 percent of their budget. [Laughs] Start cutting it. And then see how they wise up.

RS: So, finally, Apple’s the good guy and—

WB: Oh, yeah. Yeah.

RS: –the NSA’s the bad. Yes?

WB: Yeah, absolutely.

RS: OK. [Laughs]

WB: That’s why I refer to NSA now as the new Stasi agency.

RS: Stasi as in the German secret police of East Germany, at the worst moments of—

WB: As in the German—yeah—I’ve gotten a lot of flak from different people over in England, too, about that. And I said, well, look at what’s going on. The Stasi had these—I went through the Stasi museum over there, which was Stasi headquarters with all the files and everything. And there’s just row after row of all these folders on individuals and all the handwritten and paper information about them that was stored in these little folders. Well, NSA has all this digitally stored. So the difference is, it’s digitally stored, it’s more complete, it’s more timely, and it’s in a much more minable storage process. So I call them the new Stasi agency. And I always refer back to Wolfgang Schmidt, a former lieutenant colonel in the East German Stasi, who said about the NSA program, he said, “For us, this would have been a dream come true.”

RS: Ah. What a note to end. Thank you, Bill Binney, a true American hero as well as an American original. And you know, too bad we don’t have more whistleblowers like you.

WB: Yeah.

RS: Take care.

WB: Thanks, Bob.

